At Super Good, we work on Solidus stores of all types, from stores with custom third-party API integrations to subscriptions to custom PDF generation systems. One thing all our client stores have in common is the desire to give customers a stable, fast experience at an operational cost that fits the budget. Improving performance and stability is often a balance between carefully optimizing code and scaling up production infrastructure, both of which can be costly and time-consuming. This post sets out to determine whether we can improve performance without changing any code or adding extra production infrastructure costs. We test the jemalloc project on a production Solidus store to see whether it fixes some memory usage issues without negatively impacting response time.

Motivation

As mentioned in the introduction, we’re trying to improve our store’s memory usage without incurring the cost of code changes or extra production infrastructure. We must also verify that jemalloc doesn't negatively impact application performance, as measured by HTTP response time. If our application frequently runs out of memory, it may be relying on slower swap storage, which hurts application performance.

Background

Before we can understand how jemalloc helps reduce memory usage, we need some quick background on what a memory allocator does and what role it plays for Ruby apps. A memory allocator implements the malloc family of functions (malloc, calloc, realloc, free) specified by ISO C and POSIX. In short, malloc provides dynamic memory to processes like Ruby at runtime. When we instantiate a new object, Ruby places it in a slot on its own object heap. Ruby calls malloc whenever it needs more memory, either to add heap pages or to store data that doesn't fit in a slot, such as the contents of a long string or a large array.
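The following small sketch uses Ruby's standard objspace library to make the slot vs. malloc distinction concrete. It assumes CRuby (MRI). Other Ruby implementations manage memory differently, and exact sizes vary between Ruby versions. A short string fits inside its object slot. A long string's bytes live in a separate buffer that CRuby obtains from the system allocator, and that buffer is the kind of memory jemalloc would manage.

```ruby
require "objspace"

# Assumes CRuby (MRI). A short string is stored inside its object slot
# on Ruby's own object heap.
short = "hello"

# A 10,000-byte string can't fit in a slot, so CRuby stores its bytes
# in a separate buffer obtained from the system allocator (malloc).
long = "a" * 10_000

# memsize_of reports the slot plus any out-of-slot buffer the object owns.
puts "short string: #{ObjectSpace.memsize_of(short)} bytes"
puts "long string:  #{ObjectSpace.memsize_of(long)} bytes"
```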

All Unix-based operating systems include some implementation of malloc. The GNU C Library (glibc) implementation is the most common one on Linux distributions, but there are many others. (For example, Alpine Linux ships with musl.) jemalloc is a malloc implementation that originated in the FreeBSD operating system. It has been FreeBSD's default memory allocator since 2005 and is available for many other operating systems, including most Linux distributions and macOS.

Is It Safe to Try in Production?

Every Solidus store must evaluate the risk of switching allocators on a case-by-case basis. If your Solidus store has existing stability issues unrelated to memory usage, it may be worth diagnosing those first. It’s also worth verifying that you have error reporting in place in case anything goes wrong. At a high level, we can evaluate the risk by analyzing how much C code is present in our Ruby app. C extensions manage memory themselves, while plain Ruby code only reaches the allocator through the Ruby VM. Most Solidus stores include some C extensions for the pg or mysql gems, but these gems are known to work well with jemalloc. If your Solidus app has no custom C extensions, it’s reasonably safe to try jemalloc locally or in a staging environment.
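Before trying jemalloc, it helps to know which gems actually bring C code into the process. The sketch below shows one way to get that inventory. It assumes the script runs inside the app (for example via `bin/rails runner`), so that `Gem.loaded_specs` reflects the bundle. It treats a gem as native if the gem either declares extensions to compile or is a precompiled, platform-specific build. The method name `native_extension_gems` is hypothetical.

```ruby
# Hypothetical helper: lists gems that bring native (C) code into the process.
# Assumption: a gem is native if it declares extensions to compile
# (spec.extensions) or is a precompiled platform gem (platform other than
# "ruby"), since precompiled gems usually declare no extensions.
def native_extension_gems(specs = Gem.loaded_specs.values)
  specs
    .select { |spec| spec.extensions.any? || spec.platform.to_s != "ruby" }
    .map(&:name)
    .sort
end

# Inside the app (e.g. via bin/rails runner), this lists the bundle's native gems.
puts native_extension_gems
```

Anything in that list besides well-known gems like pg is worth a closer look before switching allocators.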

Enabling Jemalloc

Let’s give this a try!

Local Ruby builds

- Start by installing `jemalloc` with your package manager of choice (e.g. `brew install jemalloc` on macOS).
- Pass the `--with-jemalloc` flag to the Ruby installer of your choice. We include snippets for `ruby-install`, `ruby-build`, and asdf.

```shell
# ruby-install: configure options go after `--`
ruby-install --install-dir ~/.rubies/ruby-3.1.3-jemalloc ruby-3.1.3 -- --with-jemalloc

# ruby-build: configure options go in RUBY_CONFIGURE_OPTS
RUBY_CONFIGURE_OPTS=--with-jemalloc ruby-build 3.1.3 ~/.rubies/ruby-3.1.3-jemalloc

# asdf
RUBY_CONFIGURE_OPTS="--with-jemalloc=/usr/local/opt/jemalloc" asdf install ruby 3.1.3
```

Note that asdf users on Apple silicon Macs report some additional steps are required.
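After installing the new Ruby, it's worth confirming that jemalloc actually made it into the build. The sketch below inspects `RbConfig::CONFIG`. It assumes, as is commonly the case, that a `--with-jemalloc` build lists `-ljemalloc` in `MAINLIBS` (newer Rubies) or `LIBS` (older Rubies). The method name is hypothetical. A Ruby that only loads jemalloc at runtime through `LD_PRELOAD` won't show up here.

```ruby
require "rbconfig"

# Hypothetical helper: true if this Ruby's build configuration mentions jemalloc.
# Assumption: --with-jemalloc builds list "-ljemalloc" in MAINLIBS (newer Rubies)
# or LIBS (older Rubies), so we check both keys.
def built_with_jemalloc?(config = RbConfig::CONFIG)
  config.values_at("MAINLIBS", "LIBS").compact.any? { |libs| libs.include?("jemalloc") }
end

puts "Ruby #{RUBY_VERSION} built with jemalloc: #{built_with_jemalloc?}"
```

Run it with the freshly installed Ruby. If it prints `false`, double-check that the installer actually received the configure flag.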

On Heroku

- Install the Heroku jemalloc buildpack.
- Set the environment variable `JEMALLOC_ENABLED` to `true`.

On fly.io

- Update `config/dockerfile.yml` so `jemalloc` is set to `true`:

```yaml
options:
  bin-cd: false
  cache: false
  ci: false
  compose: false
  fullstaq: false
  jemalloc: true
  mysql: false
  parallel: false
  platform:
  postgresql: true
  prepare: true
  redis: true
  swap:
  yjit: false
  label: {}
  nginx: false
```

- Call `fly deploy` to deploy your changes.
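The Heroku buildpack and the fly.io Dockerfile option don't rebuild Ruby. Our understanding is that both load jemalloc at startup through the `LD_PRELOAD` environment variable, but verify this against your own setup. Given that, the most direct confirmation is to check which shared libraries the running process has mapped. The sketch below is Linux-only and relies on `/proc/self/maps`. The helper names are hypothetical.

```ruby
# Hypothetical helper: true if any mapped region belongs to a jemalloc library.
# Each line of /proc/<pid>/maps ends with the path of the mapped file, if any.
def jemalloc_in_maps?(maps_text)
  maps_text.each_line.any? { |line| line.include?("libjemalloc") }
end

# Linux-only: reads this process's memory map; returns false where /proc is absent.
def jemalloc_loaded?(maps_path = "/proc/self/maps")
  File.readable?(maps_path) && jemalloc_in_maps?(File.read(maps_path))
end

puts "LD_PRELOAD=#{ENV.fetch("LD_PRELOAD", "(unset)")}"
puts "jemalloc mapped into this process: #{jemalloc_loaded?}"
```

Run it in the same environment as your web process. A one-off process started from the same release and config vars should report the same result.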

Production Testing Analysis

Today we’re looking at a Solidus 3.1.6 app running on Unicorn, Ruby 2.7.8, and Heroku Professional dynos. We want to address the repeated memory quota errors (R14) reported by Heroku Metrics without negatively impacting response time or throughput. If we cannot reduce memory usage, we may need to pay more to upgrade our dynos' memory quota, which is currently 2,560MB. This app runs a web dyno and a worker dyno, but we focus on the web dyno running the Unicorn server. To enable jemalloc on this app, we add the jemalloc buildpack, then set the environment variable as described above. This change affects both the web and worker dynos, so be sure to check on both.

Memory Usage

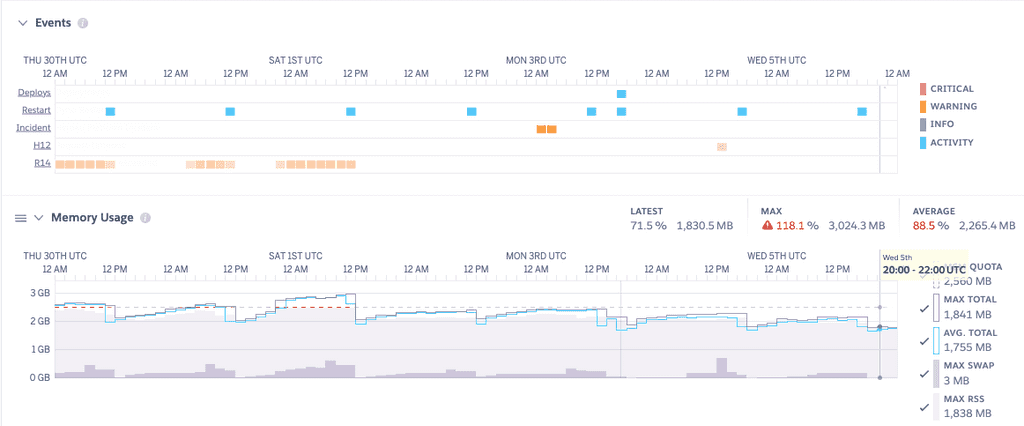

We start by reviewing the Heroku Metrics for memory usage on our web dyno. For this analysis, we use a 7-day window on the metrics data to provide a meaningful average over the business week.

Memory usage metrics with glibc malloc

Our average memory usage of 2,265.4MB (88.5% of the quota) is still under the quota. However, our maximum memory usage is far over it at 3,024.3MB (118.1%). Each time our application runs into one of these R14 errors, it falls back to much slower swap memory, so we want to eliminate these errors.
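Heroku Metrics reports memory for the whole dyno. To see how much of an individual process has been pushed out to swap, you can read the per-process figures Linux exposes in `/proc/<pid>/status`. The sketch below parses the `VmRSS` (resident) and `VmSwap` (swapped-out) lines. It assumes a Linux environment where `/proc` is readable, which we assume holds inside a Heroku dyno. The helper name is hypothetical. To inspect a Unicorn worker rather than the current process, pass it the contents of that worker's status file.

```ruby
# Hypothetical helper: extracts resident and swapped memory (in kB) from the
# text of a Linux /proc/<pid>/status file.
def memory_status(status_text)
  status_text.each_line.with_object({}) do |line, stats|
    key, value = line.split(":", 2)
    # Values look like "\t  204800 kB"; String#to_i skips leading whitespace.
    stats[key] = value.to_i if %w[VmRSS VmSwap].include?(key)
  end
end

if File.readable?("/proc/self/status")
  p memory_status(File.read("/proc/self/status"))
end
```

A steadily non-zero `VmSwap` on the web processes is a sign the dyno is leaning on swap.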

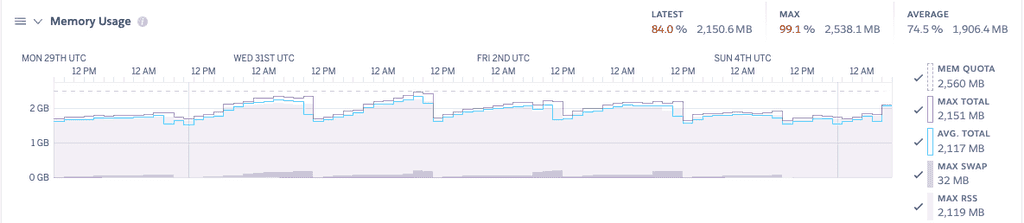

Memory usage metrics with jemalloc

After enabling jemalloc, we see an immediate improvement in memory usage. Our maximum memory usage is still quite close to the quota at 2,538.1MB (99.1%), but staying under the quota is enough to eliminate the R14 errors and avoid relying on slower swap memory.

| Metric | glibc | jemalloc | % improvement |
|---|---|---|---|
| Average Memory Usage | 2,265.4MB | 1,906.4MB | 15.85% |
| Maximum Memory Usage | 3,024.3MB | 2,538.1MB | 16.08% |
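The quota percentages quoted in this section are usage divided by the 2,560MB dyno quota. Here is a tiny sketch that reproduces them. The constant and method names are ours, for illustration only.

```ruby
# Memory quota of the web dyno in this post, in MB.
QUOTA_MB = 2_560.0

# Share of the dyno quota used, as a percentage rounded to one decimal place.
def percent_of_quota(usage_mb, quota_mb = QUOTA_MB)
  (usage_mb / quota_mb * 100).round(1)
end

{
  "glibc average"    => 2_265.4,
  "glibc maximum"    => 3_024.3,
  "jemalloc maximum" => 2_538.1,
}.each { |label, mb| puts "#{label}: #{mb}MB = #{percent_of_quota(mb)}% of quota" }
```

Anything above 100% is where Heroku reports R14 errors.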

This looks promising! Next, let’s make sure we’re moving in the right direction with response time.

Response Time

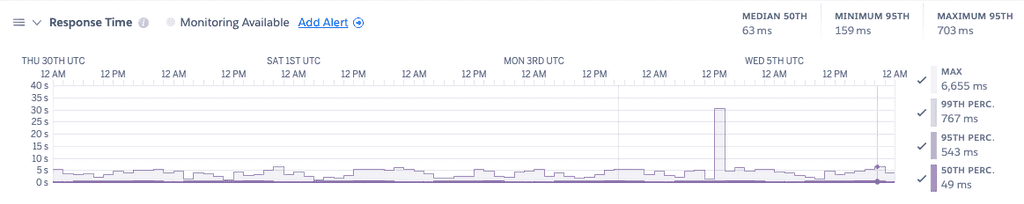

As with the memory usage analysis, we use Heroku Metrics over a 7-day period to analyze response time.

Response time metrics with glibc malloc

While the median (p50) response time, minimum p95, and maximum p95 look okay, the graph shows some large peaks, so much so that the y-axis goes all the way up to 40 seconds! One large peak on the right-hand side clocks in at a 30-second response time. However, this peak is misleading, as we can easily correlate it with a Heroku incident.

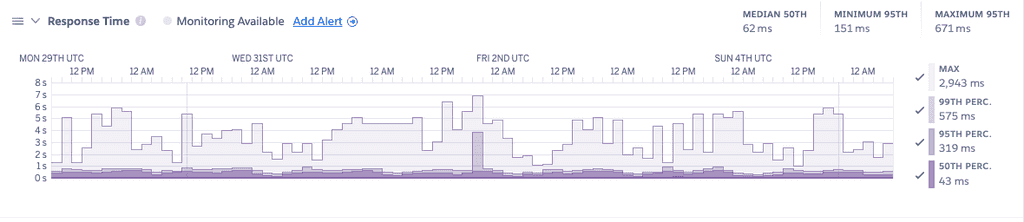

Response time metrics with jemalloc

| Metric | glibc | jemalloc | % improvement |
|---|---|---|---|
| Median p50 | 63ms | 62ms | 1.59% |
| Minimum p95 | 159ms | 151ms | 5.03% |
| Maximum p95 | 703ms | 671ms | 4.55% |
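The % improvement figures in these tables are consistent with measuring how much lower the jemalloc value is relative to the glibc baseline. This suits both tables, since lower is better for memory and response time alike. The short sketch below reproduces the response time column under that assumption. The method name is hypothetical.

```ruby
# % improvement: how much lower the jemalloc value is, relative to glibc.
# Lower is better for both memory usage and response time.
def percent_improvement(glibc, jemalloc)
  ((glibc - jemalloc) / glibc.to_f * 100).round(2)
end

{
  "Median p50"  => [63, 62],
  "Minimum p95" => [159, 151],
  "Maximum p95" => [703, 671],
}.each do |metric, (glibc_ms, jemalloc_ms)|
  puts "#{metric}: #{percent_improvement(glibc_ms, jemalloc_ms)}%"
end
```

The same method reproduces the memory rows. For example, `percent_improvement(2_265.4, 1_906.4)` gives 15.85.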

Overall, jemalloc had a negligible impact on response time for this particular app. That said, getting this app under the memory quota without negatively impacting response time is still a win!

Conclusions

The following table summarizes our findings:

| Metric | glibc | jemalloc | % improvement |
|---|---|---|---|
| Average Memory Usage | 2,265.4MB | 1,906.4MB | 15.85% |
| Maximum Memory Usage | 3,024.3MB | 2,538.1MB | 16.08% |
| Median p50 | 63ms | 62ms | 1.59% |
| Minimum p95 | 159ms | 151ms | 5.03% |
| Maximum p95 | 703ms | 671ms | 4.55% |

If your application frequently runs out of memory, try jemalloc. As Nate Berkopec said, “There are no free lunches. Though this one might be close to free. Like a ten cent lunch”. The improvements seen in this post are relatively minor, but overall we managed to stop our application from running out of memory and improved performance in the process.